OpenAI, Google, and other tech companies train their chatbots with massive amounts of data drawn from books, Wikipedia articles, news, and other online sources. But in the future, they hope to use something called synthetic data.

That is because tech companies could run out of the high-quality text the Internet has to offer for AI development. The companies also face copyright lawsuits from writers, news organizations and computer programmers for using their works without permission. (In one such lawsuit, The New York Times sued OpenAI and Microsoft.)

Synthetic data, they believe, will help reduce copyright issues and boost the supply of training material needed for artificial intelligence. Here’s what you need to know about it.

What is synthetic data?

It is data generated by artificial intelligence.

Does this mean tech companies want AI to be trained by AI?

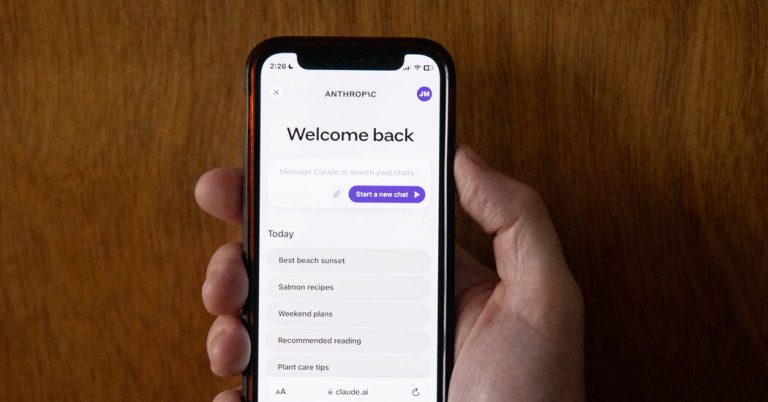

Yes. Instead of training AI models with text written by humans, tech companies like Google, OpenAI, and Anthropic hope to train their technology with data generated by other AI models.

Does synthetic data work?

Not exactly. AI models get things wrong and make things up. They have also shown that they can pick up the biases that appear in the Internet data they were trained on. So if companies use AI to train AI, they can end up reinforcing their own flaws.
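To see how flaws can compound, here is a toy calculation. It is an assumption for illustration only, not a model of any real system: suppose each AI model slightly over-produces whichever view was most common in its training data, and the next model is trained only on that output. The names `sharpen` and `train_on_own_output` and the `strength` parameter are hypothetical.

```python
def sharpen(p: float, strength: float = 1.5) -> float:
    """Hypothetical model behavior: exaggerate the majority share p (0 to 1).

    Raising both shares to a power above 1 and renormalizing pushes
    whichever side is already larger further toward 1.
    """
    a = p ** strength
    b = (1.0 - p) ** strength
    return a / (a + b)


def train_on_own_output(p0: float, generations: int, strength: float = 1.5) -> list[float]:
    """Return the majority share after each round of training on synthetic data."""
    shares = [p0]
    for _ in range(generations):
        # Each new "model" learns only from the previous model's skewed output.
        shares.append(sharpen(shares[-1], strength))
    return shares


if __name__ == "__main__":
    for gen, share in enumerate(train_on_own_output(0.6, 5)):
        print(f"generation {gen}: majority share = {share:.3f}")
```

Starting from a modest 60/40 split, the majority share grows every generation, because nothing in the loop pulls it back toward the original data.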

Is synthetic data widely used by tech companies right now?

No. Tech companies are experimenting with this. But because of the potential flaws of synthetic data, it’s not a big part of how AI systems are built today.

So why are tech companies saying synthetic data is the future?

Companies believe they can improve the way synthetic data is created. OpenAI and others have explored a technique where two different AI models work together to create synthetic data that is more useful and reliable.

An AI model generates the data. A second model then judges the data, much as a human would, deciding whether it is good or bad, accurate or not. AI models are actually better at judging text than writing it.

“If you give a technology two things, it’s pretty good at choosing which one is better,” said Nathan Lile, CEO of the artificial intelligence start-up SynthLabs.

The idea is that this will provide the high-quality data needed to train an even better chatbot.
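A minimal sketch of the generate-then-judge loop described above, assuming simple stand-ins for both models: `generate` plays the first model by writing arithmetic questions and answers, with some answers deliberately wrong, and `judge` plays the second model by deciding whether each example is accurate. All names here are hypothetical; in practice both roles would be filled by language models.

```python
import random
from dataclasses import dataclass


@dataclass
class Example:
    question: str
    answer: int


def generate(rng: random.Random, n: int) -> list[Example]:
    """Stand-in for the first model: writes arithmetic Q&A pairs.

    Roughly one answer in four is deliberately off by one, mimicking a
    model that sometimes gets things wrong.
    """
    examples = []
    for _ in range(n):
        a, b = rng.randint(1, 99), rng.randint(1, 99)
        answer = a + b
        if rng.random() < 0.25:
            answer += 1  # injected mistake
        examples.append(Example(f"What is {a} + {b}?", answer))
    return examples


def judge(example: Example) -> bool:
    """Stand-in for the second model: decides whether an example is accurate."""
    # Parse "What is A + B?" back into its two numbers.
    body = example.question.removeprefix("What is ").rstrip("?")
    left, right = body.split(" + ")
    return int(left) + int(right) == example.answer


def build_dataset(seed: int = 0, n: int = 1000) -> list[Example]:
    """Generate candidates, then keep only the ones the judge accepts."""
    rng = random.Random(seed)
    return [ex for ex in generate(rng, n) if judge(ex)]


if __name__ == "__main__":
    data = build_dataset()
    print(f"kept {len(data)} of 1000 generated examples")
```

Here the judge is exact because arithmetic can be checked mechanically; a real AI judge can itself make mistakes, so the filtered data is only as good as the judge.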

Does this technique work?

Sort of. It all comes down to that second AI model. How good is it at judging text?

Anthropic has been the most vocal about its efforts to make this work. It tunes the second AI model using a “constitution” curated by the company’s researchers. This teaches the model to choose text that supports certain principles, such as liberty, equality and fraternity, or life, liberty and personal security. Anthropic’s method is known as “Constitutional AI.”

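Here’s how two AI models work in tandem to produce synthetic data using a process like Anthropic’s: the first model writes responses, and the second compares them against the constitution and picks the one that better follows its principles.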
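A minimal sketch of the judging step, assuming a drastically simplified constitution. In Constitutional AI the principles are natural-language statements that a model reads; here each one is a crude keyword check so the example runs without any model. `CONSTITUTION`, `prefer` and `make_preference_pairs` are hypothetical names, and this is not Anthropic’s implementation.

```python
from typing import Callable

Principle = Callable[[str], bool]

# Hypothetical toy constitution: each principle is a keyword test,
# standing in for a natural-language principle read by a judge model.
CONSTITUTION: dict[str, Principle] = {
    "avoids insults": lambda text: not any(
        word in text.lower() for word in ("idiot", "stupid")
    ),
    "respects personal security": lambda text: not any(
        word in text.lower() for word in ("hurt", "attack")
    ),
}


def prefer(a: str, b: str, constitution: dict[str, Principle]) -> int:
    """Stand-in judge: return 0 if response a satisfies at least as many
    principles as response b, otherwise 1."""
    score_a = sum(check(a) for check in constitution.values())
    score_b = sum(check(b) for check in constitution.values())
    return 0 if score_a >= score_b else 1


def make_preference_pairs(
    triples: list[tuple[str, str, str]],
    constitution: dict[str, Principle],
) -> list[tuple[str, str, str]]:
    """Turn (prompt, response_a, response_b) triples into
    (prompt, chosen, rejected) records."""
    pairs = []
    for prompt, a, b in triples:
        winner = prefer(a, b, constitution)
        chosen, rejected = (a, b) if winner == 0 else (b, a)
        pairs.append((prompt, chosen, rejected))
    return pairs


if __name__ == "__main__":
    candidates = [
        ("How do I settle an argument with a neighbor?",
         "Talk to them calmly and listen.",
         "Tell them they're an idiot."),
        ("What should I do if someone insults me?",
         "Hurt them back.",
         "Walk away and cool off."),
    ]
    for prompt, chosen, rejected in make_preference_pairs(candidates, CONSTITUTION):
        print(f"{prompt}\n  chosen:   {chosen}\n  rejected: {rejected}")
```

The judge compares two responses rather than scoring one in isolation, echoing the point that models are good at picking the better of two options. Chosen/rejected pairs like these are the kind of preference data approaches of this sort use to train a model further.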

Even so, humans are needed to ensure that the second AI model stays on track. This limits how much synthetic data this process can generate. And researchers disagree about whether a method like Anthropic’s will continue to improve AI systems.

Does synthetic data help companies circumvent the use of copyrighted information?

The artificial intelligence models that generate synthetic data were themselves trained on human-generated data, much of which was copyrighted. So copyright holders still argue that companies like OpenAI and Anthropic used copyrighted text, images and videos without permission.

Jeff Clune, a computer science professor at the University of British Columbia who previously worked as a researcher at OpenAI, said AI models could eventually become more powerful than the human brain in some ways. But if they do, it will be because they learned from the human brain.

“To borrow from Newton: AI sees further by standing on the shoulders of giant human datasets,” he said.