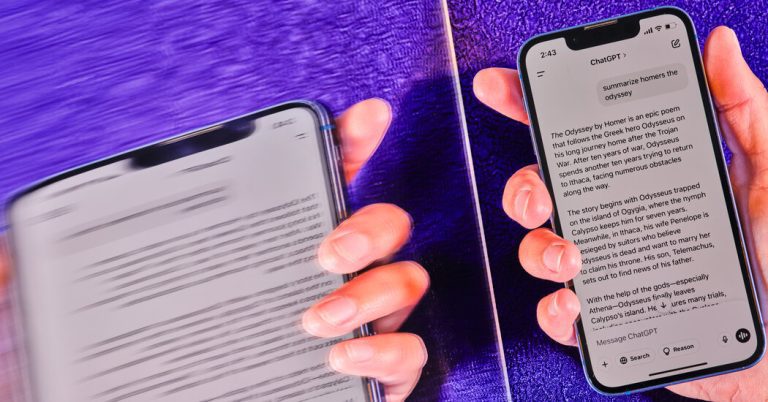

In September, OpenAI unveiled a new version of ChatGPT designed to reason through tasks involving mathematics, science and computer programming. Unlike previous versions of the chatbot, this new technology could spend time “thinking” through complex problems before settling on an answer.

Soon, the company said its new reasoning technology had outperformed the industry’s leading systems on a series of tests that track the progress of artificial intelligence.

Now other companies, such as Google, Anthropic and China’s DeepSeek, offer similar technologies.

But can AI actually reason like a human? What does it mean for a computer to think? Are these systems really approaching true intelligence?

Here’s a guide.

What does it mean when an AI system reasons?

Reasoning simply means that the chatbot spends some extra time working on a problem.

“Reasoning is when the system does extra work after the question is asked,” said Dan Klein, a professor of computer science at the University of California, Berkeley, and chief technology officer of Scaled Cognition, an AI start-up.

It may break a problem into individual steps or try to solve it through trial and error.

The original ChatGPT answered questions immediately. The new reasoning systems can work through a problem for a few seconds – or even minutes – before answering.

Can you be more specific?

In some cases, a reasoning system will refine its approach to a question, repeatedly trying to improve the method it has chosen. Other times, it may try several different ways of approaching a problem before settling on one of them. Or it may go back and check some work it did a few seconds before, just to see if it was right.

Basically, the system tries everything it can to answer your question.

This is like a school student who is struggling to find a way to solve a math problem and scribbles many different options on a sheet of paper.
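For readers who think in code, the try-several-approaches-and-check-the-work pattern can be sketched in a few lines of Python. This is only a toy illustration, not how any company's system works internally. The equation, the two "methods" and every function name are made up for the example.

```python
from typing import Callable, List, Optional

def check(x: int) -> bool:
    """Check a candidate answer by plugging it back into x^2 - 5x + 6 = 0."""
    return x * x - 5 * x + 6 == 0

def careless_factoring() -> Optional[int]:
    """A hypothetical flawed attempt that gets a sign wrong."""
    return -2

def guess_small_numbers() -> Optional[int]:
    """Trial and error: test small whole numbers one by one."""
    for x in range(10):
        if check(x):
            return x
    return None

def solve_with_checking(methods: List[Callable[[], Optional[int]]]) -> Optional[int]:
    """Try each approach in turn and keep the first answer that survives the check."""
    for method in methods:
        candidate = method()
        if candidate is not None and check(candidate):
            return candidate
    return None

answer = solve_with_checking([careless_factoring, guess_small_numbers])
print(answer)  # prints 2
```

The first attempt fails its own check, so the sketch moves on to a second approach instead of returning a wrong answer.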

What kind of questions require an AI system to reason?

It can potentially reason about anything. But reasoning is most effective when you ask questions involving mathematics, science and computer programming.

How is a reasoning chatbot different from previous chatbots?

You could ask previous chatbots to show you how they had reached a specific answer or to check their own work. Because the original ChatGPT had learned from text on the internet, where people showed how they had gotten to an answer or checked their own work, it could do this kind of self-reflection, too.

But a reasoning system goes further. It can do these things without being asked. And it can do them in more extensive and complex ways.

Companies call it a reasoning system because it feels as if it operates more like a person thinking through a hard problem.

Why is AI reasoning important now?

Companies like OpenAI believe that this is the best way to improve their chatbots.

For years, these companies relied on a simple concept: the more internet data they pumped into their chatbots, the better those systems performed.

But in 2024, they used up almost all the text on the internet.

This meant that they needed a new way of improving their chatbots. So they began to build reasoning systems.

How do you create a reasoning system?

Last year, companies like OpenAI began to lean on a technique called reinforcement learning.

Through this process – which can extend over months – an AI system can learn behavior through extensive trial and error. By working through thousands of math problems, for example, it can learn which methods lead to the correct answer and which do not.

Researchers have designed complex feedback mechanisms that show the system when it has done something right and when it has done something wrong.

“It’s a bit like training a dog,” said Jerry Tworek, an OpenAI researcher. “If the system does well, you give it a cookie. If it doesn’t do well, you say, ‘Bad dog.’”
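The cookie-or-scolding idea can be shown with a very small sketch in Python. It is a simplified stand-in (a textbook "epsilon-greedy" learner), not the method any company actually uses. The method names, their hidden success rates and the `train` function are all invented for illustration. The learner never sees the success rates. It only sees whether each attempt earned a reward.

```python
import random
from typing import Dict

def train(success_rates: Dict[str, float], rounds: int = 5000,
          explore: float = 0.1, seed: int = 0) -> Dict[str, float]:
    """Learn by trial and error which method tends to earn a reward."""
    rng = random.Random(seed)
    methods = list(success_rates)
    value = {m: 0.0 for m in methods}   # running estimate of each method's payoff
    tries = {m: 0 for m in methods}
    for _ in range(rounds):
        if rng.random() < explore:
            method = rng.choice(methods)            # occasionally try something else
        else:
            method = max(methods, key=value.get)    # otherwise use the best so far
        # Feedback: 1.0 is the "cookie", 0.0 is the "bad dog".
        reward = 1.0 if rng.random() < success_rates[method] else 0.0
        tries[method] += 1
        value[method] += (reward - value[method]) / tries[method]
    return value

# Hypothetical methods and made-up chances of reaching the right answer.
rates = {
    "guess": 0.1,
    "work step by step": 0.6,
    "work step by step, then check": 0.9,
}
learned = train(rates)
best = max(learned, key=learned.get)
print(best)
```

After enough rounds, the learner's estimates favor the method that is rewarded most often, even though nobody told it which one that was.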

(The New York Times sued OpenAI and its partner, Microsoft, in December for copyright infringement of news content related to AI systems.)

Does reinforcement learning work?

It works quite well in some areas, such as mathematics, science and computer programming. These are areas where companies can clearly define the good behavior and the bad. Math problems have definitive answers.

Reinforcement learning does not work as well in areas such as creative writing, philosophy and ethics, where the distinction between good and bad is harder to pin down. Still, researchers say this process can generally improve the performance of an AI system, even when it answers questions outside mathematics and science.

“It gradually learns what patterns of reasoning lead it in the right direction and which don’t,” said Jared Kaplan, chief science officer at Anthropic.

Are reinforcement learning and reasoning systems the same thing?

No. Reinforcement learning is the method that companies use to build reasoning systems. It is the training stage that ultimately allows chatbots to reason.

Do reasoning systems still make mistakes?

Absolutely. Everything a chatbot does is based on probabilities. It chooses a path that is most like the data it learned from, whether that data came from the internet or was generated through reinforcement learning. Sometimes it chooses an option that is wrong or does not make sense.
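A rough sketch in Python shows why a choice based on probabilities will sometimes be wrong. The question, the candidate answers and their probabilities are made up for illustration. Real systems choose among far more options at every step.

```python
import random
from typing import Dict

def pick(options: Dict[str, float], rng: random.Random) -> str:
    """Pick one option at random, weighted by its probability."""
    names = list(options)
    weights = [options[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]

# Made-up probabilities for candidate answers to "What is 2 + 2?"
options = {"4": 0.90, "5": 0.07, "22": 0.03}

rng = random.Random(0)  # fixed seed so the run is repeatable
picks = [pick(options, rng) for _ in range(1000)]
wrong = sum(p != "4" for p in picks)
print(f"wrong answers: {wrong} of {len(picks)}")
```

Even when the right answer is by far the most likely one, a small share of the picks land on a wrong answer.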

Is this a path to a machine that matches human intelligence?

AI experts are divided on this question. These methods are still relatively new, and researchers are still trying to understand their limits. In the AI field, new methods often progress very quickly at first before slowing down.